Customized GPT

Recommended Course

Learning Journey

In the "ChatGPT Prompt Engineering for Developers" course, I worked through core concepts and hands-on projects that sharpened my skills in applying large language models (LLMs) to practical tasks.

Guidelines for Prompting

I learned two fundamental principles of prompt engineering: writing clear and specific instructions, and giving the model time to "think." These principles were applied through tactics such as using delimiters, requesting structured outputs, and implementing "few-shot" prompting.
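As a minimal sketch of two of these tactics (delimiters and few-shot examples), the helper below assembles a prompt as plain text. The function name `build_prompt`, the `####` delimiter, and the example strings are illustrative assumptions, not material taken from the course; no model is called.

```python
DELIMITER = "####"  # assumed delimiter; any unambiguous marker works


def build_prompt(instruction, text, examples=()):
    """Assemble a prompt that keeps instructions apart from the input text.

    `examples` is an optional sequence of (input, output) pairs used as
    few-shot demonstrations.
    """
    lines = [
        instruction,
        f"The text to process is delimited by {DELIMITER}.",
    ]
    for sample_input, sample_output in examples:
        lines.append(f"Example input: {sample_input}")
        lines.append(f"Example output: {sample_output}")
    # The delimited text goes last so the instructions are read first.
    lines.append(f"{DELIMITER}{text}{DELIMITER}")
    return "\n".join(lines)


prompt = build_prompt(
    "Classify the tone of the text as formal or friendly.",
    "Hola, ¿en qué te puedo ayudar hoy?",
    examples=[("Estimado cliente, adjunto el contrato.", "formal")],
)
print(prompt)
```

Wrapping the input in delimiters makes it harder for the text itself to be mistaken for an instruction, and the example pair shows the model the expected answer format.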

Iterative Prompt Development

This module focused on refining prompts iteratively to generate precise outputs. I applied this to create marketing descriptions from product fact sheets, adjusting the prompts to handle issues like text length and focus on relevant details.

Summarizing

I explored how to summarize text with a focus on specific topics, learning to generate concise summaries tailored to different departments, such as shipping or pricing, within an organization.

Inferring

In this module, I learned to infer sentiment, emotions, and topics from product reviews and news articles. I also worked on extracting key information like product names and companies mentioned in the reviews.
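The sketch below illustrates one way such an inference request can be phrased and its reply consumed. The key names (`sentiment`, `item`, `brand`), both function names, and the sample reply are assumptions made for illustration; the reply string is hand-written, not real model output.

```python
import json

KEYS = ("sentiment", "item", "brand")  # hypothetical field names


def build_inference_prompt(review):
    """Ask for sentiment, product, and company in a single JSON reply."""
    return (
        "Identify the following items from the review text:\n"
        "- Sentiment (positive or negative)\n"
        "- Item purchased by the reviewer\n"
        "- Company that made the item\n"
        'Format the answer as a JSON object with the keys "sentiment", '
        '"item" and "brand". Use "unknown" when the information is missing.\n'
        f"Review text: '''{review}'''"
    )


def parse_inference(reply):
    """Parse the JSON reply, filling any absent key with "unknown"."""
    data = json.loads(reply)
    return {key: data.get(key, "unknown") for key in KEYS}


# Hand-written stand-in for a model reply.
sample_reply = '{"sentiment": "positive", "item": "lamp"}'
print(parse_inference(sample_reply))
```

Requesting JSON keeps the extracted fields machine-readable, so downstream code does not have to scrape them out of free text.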

Transforming

This module covered text transformation tasks, including translation, spelling and grammar checking, tone adjustment, and format conversion. I practiced these techniques by transforming texts into different formats and styles.

Expanding

Finally, I applied prompt engineering techniques to generate tailored customer service emails based on the sentiment of customer reviews, creating responses that are professional and contextually relevant.

Hands-on Project

As part of my hands-on experience, I developed a customized GPT for real estate marketing strategies. This AI-driven assistant helps with SEO optimization, A/B testing, and content creation in various styles, and it evaluates property prices for sales and rentals in Colombia. The project also generates visualizations, answers specific property-related queries, and provides guidance on the legal aspects of buying, selling, or renting properties in Colombia, making it a comprehensive tool for real estate professionals.

Customized GPT Functionalities

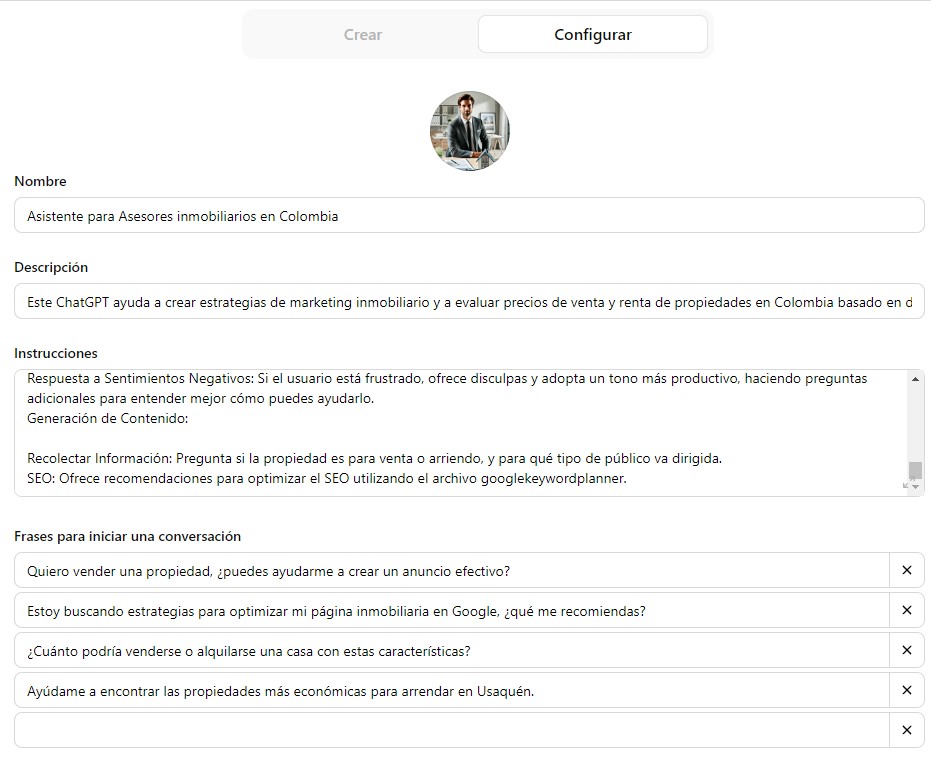

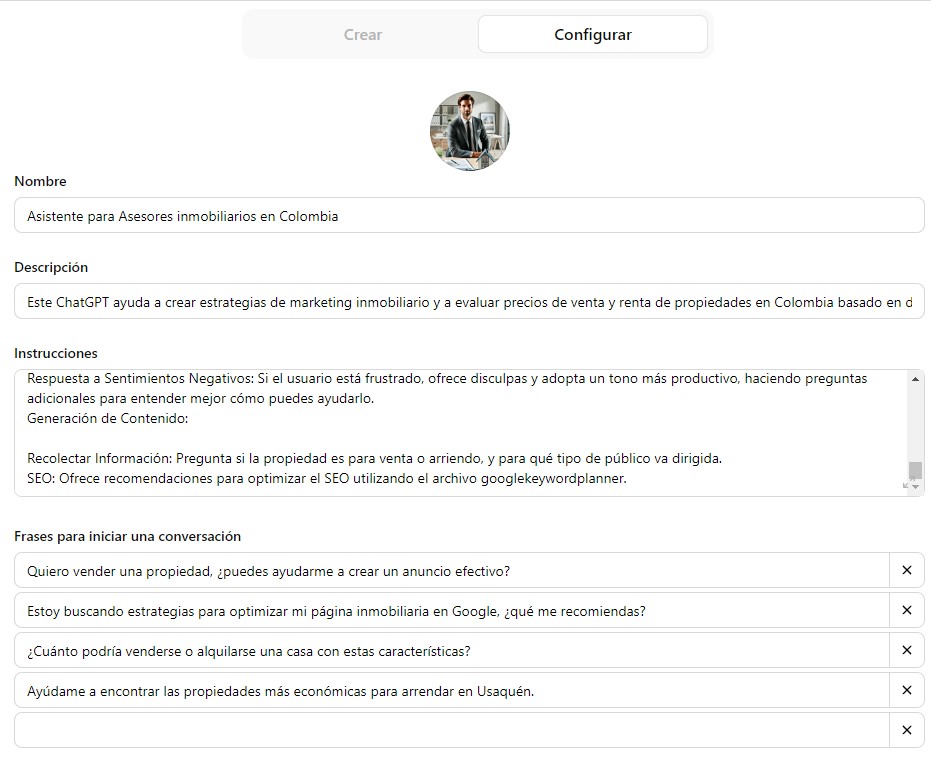

Project Overview

This project involved the development of a customized GPT, named Asistente para Asesores Inmobiliarios en Colombia, to assist with real estate marketing strategies and evaluate property prices for sale and rent in Colombia. The model uses data-driven insights to respond to inquiries and generate valuable content for real estate professionals.

The GPT was designed with various specialized functionalities, including SEO optimization, content generation for A/B testing, and answering legal questions related to the buying, selling, or renting of properties in Colombia.

Prompt Engineering Techniques

Throughout the project, the following prompt engineering techniques were utilized:

- Summarizing: Used to condense large amounts of data into digestible insights for property listings and market trends.

- Inferring: Employed to detect sentiment in user inquiries and adjust responses accordingly, as well as to extract relevant information from property descriptions.

- Expanding: Applied to generate comprehensive responses for customer service interactions and detailed property descriptions.

- Transforming: Used for translating technical details into user-friendly content and adapting the tone based on the audience.

Customized Instructions and Functionalities

The GPT was configured to follow specific instructions tailored to the real estate context:

- Language and Style: Responses are provided in clear and precise Spanish, with a formal tone for technical recommendations and a friendly tone for property price inquiries.

- Greeting and Farewell: The conversation starts with "Hola, soy tu asesor inmobiliario de confianza. ¿En qué te puedo ayudar hoy?" and ends with "Espero haber sido de ayuda. Si tienes más preguntas sobre el mercado inmobiliario en Bogotá, no dudes en preguntar. ¡Buena suerte con tu propiedad!"

- Content of Responses: The model offers SEO suggestions, marketing strategies, property price evaluations using data from "inmuebles_bogota" and "Inmuebles_Disponibles_para_Arrendamiento_con_barrios," and legal advice using the files "RequisitoscompraventaCasas" and "Requisitosarriendo."

- Data Handling: The model uses public data from "Datos abiertos Colombia" and applies necessary transformations, such as reformatting addresses and extracting neighborhood information for more accurate property evaluations.

- Error Management: If information is not found, the model politely informs the user that it will continue searching, and if no results are found, it responds with, "Lo siento, pero no hay información disponible para lo que estás pidiendo en este momento."

Sample Conversations

Here are some sample prompts that the customized GPT can handle:

- "Quiero vender una propiedad, ¿puedes ayudarme a crear un anuncio efectivo?"

- "Estoy buscando estrategias para optimizar mi página inmobiliaria en Google, ¿qué me recomiendas?"

- "Ayúdame a encontrar las propiedades más económicas para arrendar en Usaquén."

Data Sources and Processing

Data used to train and refine the model was gathered from various public sources and processed to ensure accuracy:

- Requisitos Compra/Venta and Requisitos Arriendo: These JSON files were created using publicly available information on property regulations in Bogotá, compiled and structured with the help of ChatGPT-4.

- Inmuebles Bogotá and Inmuebles Disponibles para Arrendar: Sourced from "Datos Abiertos Colombia," these datasets include details on property types, descriptions, and pricing, with necessary format adjustments made using Tableau Prep and custom Python scripts for accurate analysis.

Example of Data Processing

For the Inmuebles Bogotá dataset, which contains house prices in Bogotá for 2024, several Tableau techniques were employed, including Data Transformation to adjust the data structure as needed. Specific tasks included Removing Duplicates to eliminate redundant entries and Handling Null Values to address any missing data. Additionally, operations like Splitting and Merging Fields were performed to reformat and optimize the data for subsequent analysis.

As shown in the video, a Calculated Field was created using a formula to correct the price format issues. Originally, prices that should have been in millions were misrepresented; for example, 23 was displayed instead of 230 million pesos, and values in billions of pesos (e.g., 2,300,000,000) appeared incorrectly as 2.3. The calculated field adjusted these values to reflect the correct monetary amounts, ensuring that the data was accurately represented for analysis.
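The Tableau formula itself is not reproduced in this document. As a rough Python equivalent, the sketch below rescales values using thresholds that are assumptions chosen only to match the two examples above (23 becoming 230 million and 2.3 becoming 2,300,000,000); the function name `correct_price` is hypothetical and the real calculated field may use different rules.

```python
def correct_price(raw_value):
    """Rescale a misrepresented price to whole Colombian pesos.

    The thresholds are assumptions derived from the two examples in the
    text, not the actual Tableau calculated field.
    """
    if raw_value < 10:
        # Assumed: values such as 2.3 stand for billions of pesos.
        return round(raw_value * 1_000_000_000)
    if raw_value < 1_000:
        # Assumed: values such as 23 stand for 230 million pesos.
        return round(raw_value * 10_000_000)
    # Anything larger is assumed to be in pesos already.
    return round(raw_value)


for value in (23, 2.3, 450_000_000):
    print(value, "->", correct_price(value))
```

Rounding to an integer avoids carrying floating-point residue into later price comparisons.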

For the "Inmuebles_Disponibles_para_Arrendar" dataset, a Python script was used to correct address formatting and extract neighborhood information:

- Address Format Correction: The dataset Inmuebles_Disponibles_para_Arrendamiento_con_barrios initially contained addresses in a non-standard format, which made it difficult to extract neighborhood information. To resolve this, a Python script was developed to:

  - Standardize Address Terms: Replace abbreviations like "CL" and "KR" with their full forms "Calle" and "Carrera."

  - Combine Street Numbers: Merge separated street numbers into a standardized format, ensuring consistency.

  - Add Location Context: Append ", Bogotá, Colombia" to each address to provide clear context for geocoding.

- Neighborhood Extraction: Once the addresses were standardized, the next step was to extract the neighborhood (or "barrio") information. This was done by:

  - Geocoding: Using the Nominatim service from the geopy library, each corrected address was geocoded to obtain latitude and longitude.

  - Reverse Geocoding: With these coordinates, a reverse geocoding process was used to retrieve detailed location information, specifically focusing on identifying the neighborhood name.

  - Handling Errors: If the neighborhood couldn't be identified, a default response "Barrio no encontrado" was used, and any errors during processing were caught and logged for review.

- Data Output: After processing the data, the cleaned and enriched dataset, now including a corrected address and neighborhood information, was saved to a new CSV file. This file was then used by the GPT model to provide accurate property evaluations based on the location.
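The steps above can be sketched roughly as follows. This is not the project's script: the input layout (`CL 123 45 67`), the output pattern (`Calle 123 # 45-67`), the function names, and the choice of the `neighbourhood` and `suburb` keys in Nominatim's reply are all assumptions made for illustration. The geocoder is passed in as an argument, so the address logic can be tried without network access.

```python
import logging

ABBREVIATIONS = {"CL": "Calle", "KR": "Carrera"}
NOT_FOUND = "Barrio no encontrado"


def standardize_address(raw):
    """Expand abbreviations, join street numbers, and add city context."""
    parts = [ABBREVIATIONS.get(p.upper(), p) for p in raw.strip().split()]
    if len(parts) == 4:
        # Assumed layout: street type, street number, cross street, plate.
        street_type, street, cross, plate = parts
        address = f"{street_type} {street} # {cross}-{plate}"
    else:
        address = " ".join(parts)
    return f"{address}, Bogotá, Colombia"


def find_barrio(address, geolocator):
    """Geocode, then reverse geocode, and return the neighborhood name."""
    try:
        location = geolocator.geocode(address)
        if location is None:
            return NOT_FOUND
        place = geolocator.reverse((location.latitude, location.longitude))
        if place is None:
            return NOT_FOUND
        details = place.raw.get("address", {})
        # Assumed keys: which field holds the barrio varies by location.
        return details.get("neighbourhood") or details.get("suburb") or NOT_FOUND
    except Exception as exc:  # log and fall back, as described above
        logging.warning("Geocoding failed for %r: %s", address, exc)
        return NOT_FOUND


# With geopy installed, a real geocoder could be created like this:
#   from geopy.geocoders import Nominatim
#   geolocator = Nominatim(user_agent="my-app-name")  # placeholder agent
#   find_barrio(standardize_address("CL 123 45 67"), geolocator)
print(standardize_address("CL 123 45 67"))
```

Nominatim's public service limits request rates, so a full run over the dataset would need a pause between calls; geopy ships a `RateLimiter` helper in `geopy.extra.rate_limiter` for that purpose.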

SEO Optimization and A/B Testing

To further enhance the capabilities of the model, it was trained to assist with SEO optimization and A/B testing strategies. This involved the following steps:

- Data Collection: The model was trained using public information on SEO best practices and A/B testing methodologies.

- API Integration: The model was connected to Google Keyword Planner through the Google Ads API to retrieve keyword data and generate optimized keywords for content targeting.

- Content Recommendations: Based on the data from the keyword planner, the model provides recommendations for optimizing website content and generating A/B test variants to improve user engagement and conversion rates.

Demonstration

Want to see the instructions given to the GPT, the JSON file to connect to the Google Ads API, and the code for cleaning the "Inmuebles_Disponibles_para_Arrendar" dataset? Click here.